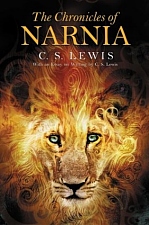

I recently finished C. S. Lewis' The Chronicles of Narnia. These fantastical stories of youth visiting other worlds, talking with animals, fighting battles, and solving riddles are truly entertaining. There is, however, a non-subtle message about faith embedded within the stories, especially in the last book, The Last Battle.


In The Last Battle, characters are presented with a false "god", and after realizing they were deceived, some give up altogether on the belief. These characters are punished in the end for this "treachery", while those characters who continued to believe in the real god are rewarded. This is a very common theme in literature, as well as many world religions.

But there is a stark contrast between the faith we read about in literature and the faith we practice in real life. I will argue that this contrast is used to mask the fact that we are all rational and skeptical. Belief is the core function; faith is simply an intermediary for evidence. Through a generalized literary discussion, I hope to show how faith is used to fill in the gap between what we want to believe and reality.[cut]

Figure 1: Archetypes of believer / non-believer in literature

The chart here depicts the archetypes of believers and non-believers in stories. These general statements fit many stories of characters in the throes of faith and belief. Usually, their status as "Believer" or "Non-Believer" is very distinct, and little middle ground is provided. In The Last Battle, the dwarfs went from serving the false Aslan (demigod of Narnia), to the tune of servitude and hard labor, to completely giving up on the idea of Aslan once it was proved they were deceived. In the rather poor movie adaptation/remaking of the Brothers Grimm, one of the main characters refuses to believe in supernatural notions right until the end of the movie, when the resolution of his "faith-full" brother impresses him. This is despite the dramatic evidence, presented to his character throughout the movie, that there were supernatural occurrences.

This characterization of "black or white" faith is inconsistent with reality. Many religious people question their beliefs at some point. A constant debate has existed throughout history on the merits of religion, religious dogma, and morality. Pundits may pick a side to stand on, but the majority of practitioners do not accept the entirety of beliefs for any single religion.

There obviously must be some middle ground that allows us to accept major tenets of religions, while rationally rejecting other, less important ones. It is the attribution of importance to religious ideas that separates faiths.

Justifications

But the table above contains more rifts between literature and reality. My favorite is the justification. Most "non-believers" (i.e. atheists, humanists, agnostics, etc.) attribute their beliefs (or lack thereof) to missing evidence. The notion of evidence is prevalent in nearly every religion on earth. The Bible is presented as evidence for many Christian and Jewish faiths. There are also arguments about the inherent "designed" nature of the world around us, and the internal spiritual feeling and communion with god. But none of these, save the Bible, are actually evidence.

Evidence must be corroborated, objective, and impeachable. That is to say any particular piece of evidence must be shown to actually exist (corroborated), be something that multiple people can hold, measure, observe, or otherwise handle and reach the same conclusions (objective), and be used in a theory that can be proven false (impeachable). The following pieces of evidence fail one or more of these tests:

While alone in prayer, someone saw an apparition that communicated important information to him. If this event cannot be corroborated by witnesses, recording devices, or trace evidence (like a light-bleached floor with the shadow of the man praying, or a prophecy that comes true), then it is not evidence.

A religious person claims to feel god inside him. This may be a sincere claim, but it's not evidence, not even for the subject. If the same type of feeling can't be felt by others under controlled conditions, then there exist too many variables to make any judgments. In this example, the subject may be feeling sick, or drugged, or may be feeling something quite normal that has more to do with emotion than supernatural possession. [NOTE: There are personal feelings that CAN be considered objective. For example, the feeling we call love leaves trace patterns of electrical activity in the subject's brain. These trace patterns can be seen in the brains of different subjects who are asked to think about someone they love. From this evidence, we can conclude that there is a basis for our notion of love.]

An artifact is recovered in an archeological dig and said to be a possession of a past deity. While many scientists can pick this up, play with it, study it, and otherwise violate it, this cannot be evidence of the deity's existence. There is no way to prove whether or not any specific object was used by any particular individual unless it is inscribed with their name (or has some other form of trace evidence). You cannot prove this statement false, thus it is not evidence. The same goes for "Unicorns exist, they are just hard to find" and "Popcorn is made of gold, but turns back to popcorn whenever you touch or look at it."

I mentioned the Bible before as an exception in the list of evidence for religious beliefs. The Bible is, by all counts, acceptable evidence. It can be held, studied, verified, and proven wrong. The accounts of tribes, battles, and lineage in the Bible should, if true, have other evidence to corroborate their authenticity. In addition, the Bible makes claims that can be proven wrong. While for now I will simply state that in my research, the Bible has been shown to be more a historical journal than a proof of any religious tenet, this website has plenty of information on it.

Given the above discussion on evidence, how do believers justify their convictions? Here, the literature is overwhelmingly hypocritical. Literature portrays the non-believer as ignorant, blind-to-evidence, and self-conceited. Characters are seen in a bad light for not having faith, but what is actually going on is these characters are rejecting actual evidence. If these worlds were the real world, there would be wide-spread belief based on this evidence. This does not fit with why people believe in reality.

In reality, most religious people cite personal conviction as a top justification for their belief. Additionally, evidence that is provided which conflicts with these beliefs is wholly rejected, without accounting for the merits of the claim. Lastly, most of the faithful study very little about other beliefs, not by ineptitude, but rather lack of desire. Why bother reading about someone else's claims if you already accept yours as true? We often, paradoxically, seek out opinions of those we already agree with, and accept opinions we agree with from anyone.

So, in the story, the guy that rejects all the signs is seen as having no faith, while in reality the same guy is rejecting fact. But also in the stories, the guy that believes on faith isn't actually using faith; he's simply accepting evidence. In reality, this person would be considered a skeptic or rationalist.

Status

Most literary characters that exhibit bravery, strong leadership, moral character, trustworthiness, or resiliency have these characteristics tied to a system of faith.


In reality, these positive characteristics do not depend on faith. There are many non-believers who have strong moral character, are trustworthy, and make excellent leaders. Additionally, many individuals from disparate faiths (like Buddhism and Islam) have these characteristics in common, even though their faiths differ. Thus, it would seem that what makes a good man (or woman) is not necessarily faith. A pity this simple fact is not written about.

It's also worth noting that the lack of correlation between faith and character means that there are bad people on all sides of faith. The religious leaders who encouraged the Crusades in the Middle Ages, radical Islamic followers who create violence, and Chinese armies that invaded Tibet all acted out of a sense of correctness. While we agree today that these acts should not represent the religions (or lack thereof) that their participants believed in, they would have, no doubt, disagreed. It's just as much a fallacy to only call Christian those who act positively (excluding the crusaders) as it is to only call American those that vote. It's also unfair to judge Christianity by the Crusades, just as it's unfair to judge America by the multitudes that don't vote.

Bottom line: Our actions are the results of personal decisions, environmental conditions, and a dash of chance. Faith no more affects our character than the way we were raised, the friends we had in high school, and choices we made at work. Only attributing positive characteristics to "faithful" characters in literature is prejudicial.

Motivation

"Let us take things as we find them: let us not attempt to distort them into what they are not. We cannot make facts. All our wishing cannot change them. We must use them." - John Henry Cardinal Newman (1801 - 1890)

While the faithful literary characters behave much like the objects of their creativity in the real world, portrayals of the non-believers are wholly unfair. A character that does not accept the "signs" or evidence of the faith in the story is seen as ignorant, dull, or simply disinterested. In some cases, the biblical "hardening of hearts" is used to justify a blatant disregard for witnessed events as evidence. Such narratives are added as a logical extension of the justifications. Remember, in literary stories, evidence of faith can be shown as booming voices from the sky, powerful apparitions, spine-tingling super-accurate prophecies, and more otherwise unexplainable events than you can shake a stick at. All this is made possible by the medium, which, facilitated by our comfort with creativity, can put together a world with completely different rules. While we may shrug at copious evidence of aliens in Roswell, literary worlds can have any evidence they need to make a point.

The result is a world in which the human experience is different. It would also make sense that our rules for interacting with it should change too, but this is not the case. Human characters must always seem human; they are our tie into these fanciful worlds. Without characters to identify with, we lose interest in the story. So how, exactly, is a human supposed to cope in a world full of supernatural events? Just like religions expect us to cope with our current world. The humanistic experiences remain the same.

In reality, a skeptic may make the claim that there is no direct evidence of God, and therefore not believe. A religious retort may include citations of evidence for consideration, but let's face it, all evidence for God is either personal (not corroborated) or ambiguous (not objective). If this weren't the case, then religious stories would not need additional "evidence" to make their cases, such as the elaborate miracles in the gospels or unequivocally true evidence in religious movies. A skeptic is a fool in these created worlds because he is the equivalent of the Holocaust deniers, moon-landing deniers, and JFK assassination conspiracy theorists of our world. Few have tolerance for people who refuse to accept the obvious. If I existed in the world of Narnia, I would laugh at the dwarfs who refused to believe in Aslan and defiantly believe in him myself, not because of faith, but on the evidence.


This is an important point. In literature, skepticism and rationality are disguised with faith, and hypocrisy and conspiratorial thinking are disguised with skepticism. In reality, these things are not disguised: without evidence, you either reject (skepticism) or accept (faith).

Character Portrayal

SIDE BAR: The Big Ten. The Ten Commandments are seen as a pinnacle of law and religious thought. A vocal minority claim that they are the basis for our modern law, and some even claim they are the basis for American law. Let's look at this claim. Here are the Ten Commandments. Of these ten, only two can be said to exist in American law, or in that of any secular government for that matter (don't steal or kill). And even then, there are exceptions (capital punishment, war, annexing). Additionally, would any American (religious or not) want the government to enforce any of the remaining commandments? Do you want your government telling you what God to believe in, and outlawing any other beliefs? Do you want government punishing youth for disrespecting parents? Honestly, the Ten Commandments had their time, but no one wants them around in law today.

Most people want to be on the "good side". Characteristics that universally seem good are bravery, wisdom, trustworthiness, loyalty, love, etc. Of course, anyone who opposes the good guys must be a bad guy: these are the self-conceited, power-hungry, immoral characters. These categories work well for fictional stories, but in reality this is a false dichotomy.

Only in our stories are people either truly good or bad. Good and Evil are judgments, attributions given to people, objects, and events. This binary categorization is always performed in a social, political, and religious context. As the context changes, so do the attributions. For example, slavery was a way of life in biblical days, but now it's strictly forbidden. What once was good, now is evil. Other examples include Ptolemy, heliocentrism, divorce, debt, underage marriage, polygamy, corporal punishment, mental diseases and disabilities, etc. There is no doubt that our concepts of good and evil change over time, making these not universal standards, but rather cultural appendages. See the side bar on the Ten Commandments for an excellent example.

To reiterate, literary worlds hold on tight to the dichotomy of good and evil, portraying the good guys as good and the bad guys as bad. Only in really good literature are allowances made for true humanity.

Resolution

Much of religious-like writing has an end in mind. The "faith" (remember, faith is an illusion in most literature, masking true skepticism and rationality) is payment for moving onto a better place. Those who do not believe get punished. The long journeys of trials and challenges test the characters' morals, standards, and values. In the end, the person has either proven themselves "worthy" or not. This, again, is a false portrayal of the human experience.

Every religion to exist has a way of dealing with "back-sliding", or temporary loss of faith. It is a fact that most human beings come to challenge their beliefs at some point in their lives. This is natural and good. Most religions accept this as part of a journey, a test. But you are always expected to return. And therein lies the fallacy. What if, after investigation, your original beliefs seem unreasonable? Those who hop from religion to religion are not seen as positive characters, but rather lost souls, troubled spirits, or blind wanderers.

Challenging personal beliefs has a negative stereotype, as should be expected. After all, it is very difficult to do so. As mentioned before, we often look for opinions that match or reinforce ours. It takes extenuating circumstances to make a true challenge. It often takes a change of environment, lots of new information, and time. So why aren't these journeys valued in literature? Where is the story about the boy who believed one thing, then after a long journey and self-inspection believed something else?

This, unfortunately, is a symptom of reality. Literature will resolve religious stories just like we do in real life, because in real life we like to feel like we were right all along. In every book of The Chronicles of Narnia, characters are challenged and become sidetracked, but inevitably find themselves more resolute in their beliefs in the end. Those that change their minds (the dwarfs in The Last Battle) are lost fools.

Conclusions

Perhaps the saving grace is a much broader concept. That of belief itself. Having a center, a purpose, a reason and direction in life. This is the ultimate story which we live and write about. Without some sort of belief, we are truly lost. For it is in the warm bosom of belief that we find meaning in life. And meaning is what makes the sunrise beautiful, love powerful, and death scary. Science will never give us meaning, but it need not, for there is plenty of it to go around.


Let's just keep some perspective though. Having a belief is not an inalienable right. Beliefs should be founded on as much evidence and fact as possible. This ensures that the meaning that comes from it fits with the world we live in. If you believe that aliens are our creators and will pick us up in their spacecraft sometime soon, you may miss out on the wonders of astronomy. If you believe that a higher power created the world 10,000 years ago along with all life in their kinds, you miss out on the wonders of life itself, evolution, biology, genetics, and psychology. Belief grounded in reality breeds the best kind of meaning.[/cut]